Word2vec basic.py

From Algolit

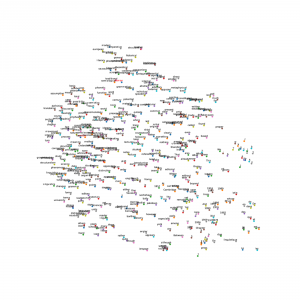

Graph generated by the word2vec_basic.py TensorFlow tutorial, based on the nearbySaussure dataset.

Latest revision as of 20:33, 4 January 2018

| Type: | Algolit extension |

| Datasets: | nearbySaussure |

| Technique: | word embeddings |

| Developed by: | a team of researchers led by Tomas Mikolov at Google, Claude Lévi-Strauss, Algolit |

This is an annotated version of the basic word2vec script. The code is based on this Word2Vec tutorial provided by TensorFlow.

History

Word2vec is a group of related models used to generate vectors from words (also called word embeddings). It is a two-layer neural network, developed by a team of researchers led by Tomas Mikolov at Google. The script that we use here is not the original version of word2vec. The original project is written in C, so we looked for a version of the script written in Python. Another Python implementation of word2vec is provided by Gensim.

word2vec_basic_algolit.py

The structure of the annotated word2vec script is the following:

- Step 1: Download data.
  - Algolit step 1: read data from plain text file
  - Algolit inspection: wordlist.txt
- Step 2: Create a dictionary and replace rare words with UNK token.
  - Algolit inspection: counted.txt
  - Algolit inspection: dictionary.txt
  - Algolit inspection: data.txt
  - Algolit inspection: disregarded.txt
  - Algolit adaption: reversed-input.txt
- Step 3: Function to generate a training batch for the skip-gram model
- Step 4: Build and train a skip-gram model.
  - Algolit inspection: big-random-matrix.txt
  - Algolit adaption: select your own set of test words
- Step 5: Begin training.
  - Algolit inspection: training-words.txt
  - Algolit inspection: training-window-words.txt
  - Algolit adaption: visualisation of the cosine similarity calculation updates
  - Algolit inspection: logfile.txt
- Step 6: Visualize the embeddings.
  - Algolit adaption: select 3 words to be included in the graph

Source

The script word2vec_basic.py provides an option to download a dataset from Matt Mahoney's home page. It turns out to be a plain text document, without any punctuation or line breaks.

For the tests that we wanted to do with the script, we decided to work with an algoliterary dataset that revolves around the structuralist linguistic theory of Ferdinand de Saussure: nearbySaussure. The dataset contains 424,811 words in total, of which 24,651 are unique.

Before we could use the three books that form this dataset as training material, we needed to remove all the punctuation from them. To do this, we wrote a small Python script, text-punctuation-clean-up.py. The script saves a *stripped* version of the original book under another filename.
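The clean-up step can be sketched roughly as follows. This is not the actual text-punctuation-clean-up.py; the function name `strip_punctuation` and the choice to replace punctuation with spaces are assumptions made for illustration.

```python
import string

def strip_punctuation(text):
    """Replace every ASCII punctuation character with a space, then collapse whitespace."""
    table = str.maketrans({ch: " " for ch in string.punctuation})
    return " ".join(text.translate(table).split())

# Hypothetical sample sentence, not a line from the dataset
sample = "Why, still today, do we find the name of Ferdinand de Saussure?"
stripped = strip_punctuation(sample)
print(stripped)
```

Replacing punctuation with a space (instead of deleting it) splits a word such as "Saussure's" into two tokens, which would be one possible explanation for the single letter "s" showing up among the most frequent words further down.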

wordlist.txt

From continuous text to list of words, exported as wordlist.txt.

[u'Introduction', u'saussure', u'today', u'Carol', u'sanders', u'Why', u'still', u'today', u'do', u'we', u'\ufb01nd', u'the', u'name', u'of', u'ferdinand', u'de', u'saussure', u'featuring', u'prominently', u'in', u'volumes', u'published', u'not', u'only', u'on', u'linguistics', u'but', u'on', u'a', u'multitude', u'of', u'topics', ... ]

counted.txt

From list of words to a list with the structure [(word, value)], exported as counted.txt.

Counter({u'the': 22315, u'of': 16396, u'and': 8271, u'a': 8246, u'to': 7797, u'in': 7314, u'is': 5983, u'as': 4143, u'that': 3586, u'it': 2629, u'e': 2500, u'The': 2478, u's': 2332, u'language': 2281, u'saussure': 2201, u'which': 2101, u'by': 1962, u'this': 1944, u'on': 1937, u'be': 1808, u'or': 1751, u'r': 1713, u'not': 1689, u'an': 1680, ... })
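A minimal sketch of the two inspections above, assuming the stripped text is split on whitespace and counted with `collections.Counter` (the output format of counted.txt suggests this). The sample sentence is made up for the example.

```python
from collections import Counter

# Hypothetical stand-in for the stripped dataset
text = "the sign is the union of the signifier and the signified"

# From continuous text to a list of words
words = text.split()

# From a list of words to word frequencies
counted = Counter(words)
print(counted.most_common(1))
```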

dictionary.txt

Reversed dictionary, a list of the 5000 (=vocabulary size) most common words, accompanied by an index number, exported as dictionary.txt.

{0: 'UNK', 1: u'the', 2: u'of', 3: u'and', 4: u'a', 5: u'to', 6: u'in', 7: u'is', 8: u'as', 9: u'that', 10: u'it', 11: u'e', 12: u'The', 13: u's', 14: u'language', 15: u'saussure', 16: u'which', 17: u'by', 18: u'this', 19: u'on', 20: u'be', 21: u'or', 22: u'r', 23: u'not', 24: u'an', ... }
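The construction of the dictionary and its reversed version could look like the sketch below. It assumes that index 0 is reserved for UNK and that the remaining indices follow word frequency, as the output above suggests; `build_dictionaries` is a hypothetical helper name, and the tiny vocabulary size of 3 only serves the example.

```python
from collections import Counter

def build_dictionaries(words, vocabulary_size):
    """Map the most common words to index numbers; index 0 is kept for UNK."""
    dictionary = {"UNK": 0}
    for word, _ in Counter(words).most_common(vocabulary_size - 1):
        dictionary[word] = len(dictionary)
    # The reversed dictionary maps index numbers back to words
    reversed_dictionary = {index: word for word, index in dictionary.items()}
    return dictionary, reversed_dictionary

words = "the sign and the sign of the word".split()
dictionary, reversed_dictionary = build_dictionaries(words, vocabulary_size=3)
print(reversed_dictionary)
```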

data.txt

The object data is created: the original text in which every word is replaced with its index number, exported as data.txt.

[1169, 15, 1289, 3020, 1427, 3697, 354, 1289, 269, 68, 1021, 1, 345, 2, 234, 34, 15, 4416, 0, 6, 3052, 293, 23, 64, 19, 31, 38, 19, 4, 0, 2, 3877, ... ]

disregarded.txt

List of disregarded words that fall outside the vocabulary size, exported as disregarded.txt.

[u'prominently', u'multitude', u'Volumes', u'titles', u'lee', u'poynton', u'intriguing', u'Plastic', u'glasses', u'fathers', u'kronenfeld', u'Afresh', u'Impact', u'titles', u'excite', u'premature', u'\u2018course', u'Sole', u'brilliant', u'precocious', u'centuries', u'examines', u'tracing', u'barely', u'praise', ... ]

reversed-input.txt

Reversed version of the initial dataset, where all the disregarded words are replaced with UNK (unknown), exported as reversed-input.txt.

Introduction saussure today Carol sanders Why still today do we find the name of ferdinand de saussure featuring UNK in volumes published not only on linguistics but on a UNK of topics UNK with UNK such as culture and text discourse and methodology in Social research and cultural studies UNK and UNK 2000 or the UNK UNK UNK and church UNK UNK 1996 ...
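The three inspections data.txt, disregarded.txt and reversed-input.txt can be illustrated together. The sketch assumes a dictionary in which UNK has index 0; the three-word vocabulary and the sample sentence are hypothetical.

```python
# Hypothetical tiny vocabulary; the real one holds 5000 entries
dictionary = {"UNK": 0, "the": 1, "sign": 2}
reversed_dictionary = {index: word for word, index in dictionary.items()}

words = "the sign and the sign of the word".split()

# data: every word replaced with its index number, 0 (UNK) when unknown
data = [dictionary.get(word, 0) for word in words]

# disregarded: the words that fall outside the vocabulary
disregarded = [word for word in words if word not in dictionary]

# reversed input: index numbers translated back to words
reversed_input = " ".join(reversed_dictionary[index] for index in data)

print(data)
print(disregarded)
print(reversed_input)
```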

big-random-matrix.txt

A big random matrix is created, with a vector size of 5000x20, exported as big-random-matrix.txt.

[[ 7.91555882e-01 4.78600025e-01 -7.13676214e-01 2.30826855e-01

6.61124229e-01 2.52689123e-01 6.37347698e-02 2.63915062e-01

7.84061432e-01 6.69055700e-01 3.71650457e-01 -3.47790241e-01

-4.34857845e-01 -9.00017262e-01 5.75044394e-01 -2.66819954e-01

2.29521990e-01 -1.87541008e-01 7.47018099e-01 -8.54661465e-01]

[ 1.86723471e-01 -5.84969044e-01 -7.00650215e-01 7.50902653e-01

2.52289057e-01 -9.68446016e-01 -1.12547159e-01 -9.01058912e-01

-5.95885992e-01 3.08442831e-01 3.84899616e-01 7.09214926e-01

9.58799362e-01 -8.78485441e-01 -3.27231169e-01 6.92137718e-01

8.31190109e-01 1.67458773e-01 2.05923319e-01 -8.14627409e-01]

[ -6.24799252e-01 9.01598454e-01 7.46447325e-01 5.45922041e-01

4.28986549e-02 -2.75697231e-01 5.12938023e-01 -4.38443661e-01

7.13398457e-01 -9.77021456e-01 -6.00349426e-01 -1.46302462e-01

-9.75251198e-02 -1.80129766e-01 4.47291374e-01 -9.00330782e-01

8.20701122e-02 9.37094688e-01 -8.20110321e-01 -7.58672953e-01] ... ]
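A matrix like this one can be sketched without TensorFlow. The example assumes that the values are drawn uniformly between -1 and 1, which matches the range of the numbers shown above; the seed is only there to make the sketch repeatable.

```python
import random

vocabulary_size = 5000   # one row per word in the dictionary
embedding_size = 20      # one column per dimension of the word vector

random.seed(0)  # assumption: fixed seed, for repeatability only
embeddings = [
    [random.uniform(-1.0, 1.0) for _ in range(embedding_size)]
    for _ in range(vocabulary_size)
]

print(len(embeddings), len(embeddings[0]))
```

Every row is the starting vector of one word; training gradually moves these random vectors so that words with similar contexts end up close to each other.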

training-words.txt

A training batch of 64 words, each listed twice (128 entries in total), with a vector size of 128x20, exported as training-words.txt.

[ 323 323 52 52 107 107 2984 2984 3 3 1092 1092 48 48 4

4 0 0 2898 2898 89 89 66 66 20 20 28 28 0 0

4 4 0 0 142 142 28 28 0 0 0 0 173 173 697

697 1054 1054 133 133 0 0 0 0 13 13 4364 4364 1146 1146

2 2 1 1 201 201 2 2 1432 1432 26 26 12 12 201

201 2 2 219 219 5 5 813 813 290 290 0 0 3071 3071

5 5 1 1 280 280 2485 2485 705 705 6 6 144 144 28

28 4 4 1125 1125 2 2 301 301 9 9 7 7 2851 2851

6 6 16 16 0 0 3574 3574]

Or in words:

['One', 'One', 'can', 'can', 'then', 'then', 'enter', 'enter', 'and', 'and', 'remain', 'remain', 'In', 'In', 'a', 'a', 'UNK', 'UNK', 'synchronics', 'synchronics', 'This', 'This', 'would', 'would', 'be', 'be', 'for', 'for', 'UNK', 'UNK', 'a', 'a', 'UNK', 'UNK', 'distinction', 'distinction', 'for', 'for', 'UNK', 'UNK', 'UNK', 'UNK', 'historical', 'historical', 'questions', 'questions', 'somewhat', 'somewhat', 'like', 'like', 'UNK', 'UNK', 'UNK', 'UNK', 's', 's', 'separating', 'separating', 'off', 'off', 'of', 'of', 'the', 'the', 'book', 'book', 'of', 'of', 'god', 'god', 'from', 'from', 'The', 'The', 'book', 'book', 'of', 'of', 'nature', 'nature', 'to', 'to', 'give', 'give', 'himself', 'himself', 'UNK', 'UNK', 'Access', 'Access', 'to', 'to', 'the', 'the', 'latter', 'latter', 'eagleton', 'eagleton', 'argues', 'argues', 'in', 'in', 'fact', 'fact', 'for', 'for', 'a', 'a', 'Process', 'Process', 'of', 'of', 'reading', 'reading', 'that', 'that', 'is', 'is', 'dialectical', 'dialectical', 'in', 'in', 'which', 'which', 'UNK', 'UNK', 'undergo', 'undergo']

training-window-words.txt

The 128 connected window words, one to the left and one to the right of each training word, with a vector size of 128x20, exported as training-window-words.txt.

[[0] [52] [107] [323] [2984] [52] [3] [107] [1092] [2984] [48] [3] [4] [1092] [48] [0] [2898] [4] [89] [0] [66] [2898] [20] [89] [66] [28] [20] [0] [28] [4] [0] [0] [4] [142] [28] [0] [142] [0] [28] [0] [173] [0] [0] [697] [1054] [173] [697] [133] [0] [1054] [133] [0] [0] [13] [4364] [0] [13] [1146] [4364] [2] [1146] [1] [201] [2] [1] [2] [1432] [201] [26] [2] [1432] [12] [26] [201] [12] [2] [219] [201] [5] [2] [813] [219] [290] [5] [0] [813] [290] [3071] [5] [0] [1] [3071] [5] [280] [2485] [1] [705] [280] [6] [2485] [144] [705] [28] [6] [4] [144] [1125] [28] [2] [4] [1125] [301] [9] [2] [7] [301] [9] [2851] [6] [7] [2851] [16] [0] [6] [3574] [16] [0] [4331]]

Or in words:

['UNK', 'can', 'then', 'One', 'enter', 'can', 'and', 'then', 'remain', 'enter', 'In', 'and', 'a', 'remain', 'In', 'UNK', 'synchronics', 'a', 'This', 'UNK', 'would', 'synchronics', 'be', 'This', 'would', 'for', 'be', 'UNK', 'for', 'a', 'UNK', 'UNK', 'a', 'distinction', 'for', 'UNK', 'distinction', 'UNK', 'for', 'UNK', 'historical', 'UNK', 'UNK', 'questions', 'somewhat', 'historical', 'questions', 'like', 'UNK', 'somewhat', 'like', 'UNK', 'UNK', 's', 'separating', 'UNK', 's', 'off', 'separating', 'of', 'off', 'the', 'book', 'of', 'the', 'of', 'god', 'book', 'from', 'of', 'god', 'The', 'from', 'book', 'The', 'of', 'nature', 'book', 'to', 'of', 'give', 'nature', 'himself', 'to', 'UNK', 'give', 'himself', 'Access', 'to', 'UNK', 'the', 'Access', 'to', 'latter', 'eagleton', 'the', 'argues', 'latter', 'in', 'eagleton', 'fact', 'argues', 'for', 'in', 'a', 'fact', 'Process', 'for', 'of', 'a', 'Process', 'reading', 'that', 'of', 'is', 'reading', 'that', 'dialectical', 'in', 'is', 'dialectical', 'which', 'UNK', 'in', 'undergo', 'which', 'UNK', 'revision']
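The pairing of training words and window words can be sketched as below. This is a simplified, deterministic version written for illustration: `generate_batch` and its parameters are assumptions, and it always lists the left neighbour before the right one, whereas the order varies in the exported files above.

```python
def generate_batch(data, start, batch_size, skip_window=1):
    """Pair each centre word with its neighbours within skip_window positions."""
    assert batch_size % (2 * skip_window) == 0
    batch, labels = [], []
    position = start
    while len(batch) < batch_size:
        for offset in range(-skip_window, skip_window + 1):
            if offset != 0:
                batch.append(data[position])             # the training word
                labels.append([data[position + offset]]) # one of its window words
        position += 1
    return batch, labels

# Index numbers for: UNK One can then enter and
data = [0, 323, 52, 107, 2984, 3]
batch, labels = generate_batch(data, start=1, batch_size=6)
print(batch)
print(labels)
```

With a window of one word on each side, every training word appears twice in the batch, which is why 64 words produce 128 entries.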

logfile.txt

Save training log, exported as logfile.txt.

step: 60000
loss value: 5.90600517762
Nearest to human: physical, grammatical, empirical, social, Human, real, Linguistic, universal, Lacan, Public,
Nearest to system: System, theory, category, phenomenon, state, center, systems, collection, Psychology, Analogy,

step: 62000
loss value: 5.81202450609
Nearest to human: social, signifying, linguistic, coherent, universal, rationality, mental, empirical, Linguistic, grammatical,
Nearest to system: state, structure, unit, consciousness, System, expression, center, phenomena, category, phenomenon,

step: 64000
loss value: 5.75922590137
Nearest to human: author, grammatical, Human, Public, physical, normative, ego, Sign, linguistic, arbitrary,
Nearest to system: System, metaphysics, changes, state, systems, knowledge, listener, unit, Understanding, language,
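The "Nearest to" lines are based on cosine similarity between word vectors. A minimal sketch of that calculation, with hypothetical two-dimensional vectors that are not taken from the trained model, and with `nearest` as an assumed helper name:

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(word, embeddings, top_k=2):
    """Return the top_k words whose vectors are most similar to the given word."""
    scores = {
        other: cosine_similarity(embeddings[word], vector)
        for other, vector in embeddings.items()
        if other != word
    }
    return sorted(scores, key=scores.get, reverse=True)[:top_k]

# Hypothetical vectors; the real ones have 20 dimensions
embeddings = {
    "human": [1.0, 0.1],
    "social": [0.9, 0.2],
    "system": [0.1, 1.0],
    "structure": [0.2, 0.9],
}
print(nearest("human", embeddings))
```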