Word2vec basic.py

From Algolit

Revision as of 16:41, 3 October 2017

This is an annotated version of the basic word2vec script. The code is based on the Word2Vec tutorial provided by TensorFlow: https://www.tensorflow.org/tutorials/word2vec.

History

Word2vec consists of related models used to generate vectors from words (also called word embeddings). It is a two-layer neural network, produced by a team of researchers led by Tomas Mikolov at *Google*.

word2vec_basic_algolit.py

The structure of the annotated word2vec script is the following:

- Step 1: Download data. (optional)

- Algolit step 1: read data from plain text file

- Step 2: Create a dictionary and replace rare words with UNK token.

- Algolit extension: write the dictionary to dictionary.txt

- Step 3: Function to generate a training batch for the skip-gram model.

- Step 4: Build and train a skip-gram model.

- Algolit extension: select your own set of test words, using the dictionary.txt

- Step 5: Begin training.

- Algolit extension: write training log to logfile.txt

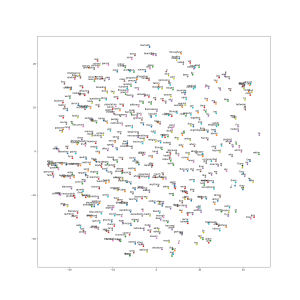

- Step 6: Visualize the embeddings.
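
To make Step 3 of the outline above more concrete, here is a minimal sketch of how skip-gram training pairs can be derived from a list of word indices. It is not the code of the annotated script. The function names `skipgram_pairs` and `batches` are hypothetical, and the sketch enumerates every context word inside the window, whereas a script may instead sample only a few context words per centre word.

```python
def skipgram_pairs(data, skip_window):
    """Return (center, context) index pairs for every position in data.

    data is a list of word indices; skip_window is the number of words
    considered on each side of the centre word.
    """
    pairs = []
    for i, center in enumerate(data):
        lo = max(0, i - skip_window)
        hi = min(len(data), i + skip_window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, data[j]))
    return pairs


def batches(pairs, batch_size):
    """Yield (inputs, labels) lists of exactly batch_size pairs each.

    A trailing group smaller than batch_size is dropped.
    """
    for k in range(0, len(pairs) - batch_size + 1, batch_size):
        chunk = pairs[k:k + batch_size]
        inputs = [center for center, _ in chunk]
        labels = [context for _, context in chunk]
        yield inputs, labels


if __name__ == "__main__":
    # Four word indices with a window of one word on each side.
    example_pairs = skipgram_pairs([0, 1, 2, 3], skip_window=1)
    print(example_pairs)
    # [(0, 1), (1, 0), (1, 2), (2, 1), (2, 3), (3, 2)]
```

Each pair tells the model "given this centre word, predict this neighbouring word", which is the training signal of the skip-gram model.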

Source

The script provides an option to download a dataset. The data sources are:

- original source in the script: http://mattmahoney.net/dc/text8.zip

- Mankind in the Making by H. G. Wells - http://www.gutenberg.org/ebooks/7058
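
Reading the training data from a plain text file, as in Algolit step 1, could look roughly like the sketch below. The function names `tokenize` and `read_words` are hypothetical, and splitting on whitespace is an assumption; the annotated script may tokenise differently.

```python
def tokenize(text):
    """Split text into tokens on any run of whitespace."""
    return text.split()


def read_words(path, encoding="utf-8"):
    """Read a plain text file and return its tokens as a list of strings."""
    with open(path, encoding=encoding) as f:
        return tokenize(f.read())
```

With a local copy of the book saved under a file name of your choice, `read_words("your_file.txt")` would return the list of words that the next steps turn into a dictionary.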

Dictionary

A snippet from the dictionary.txt file:

0: 'UNK', 1: 'the', 2: 'of', 3: 'and', 4: 'to', 5: 'a', 6: 'in', 7: 'is', 8: 'that', 9: 'it', 10: 'be', 11: 'for', 12: 'as', 13: 'are', 14: 'with', 15: 'not', 16: 'this', 17: 'or', 18: 'will', 19: 'at', 20: 'we', 21: 'but', 22: 'by', 23: 'may', 24: 'his', 25: 'all', 26: 'an', 27: 'these', 28: 'they', 29: 'have', 30: 'he', 31: 'from', 32: 'our', 33: 'has', 34: 'The', 35: 'no', 36: 'more', 37: 'which', 38: 'one', 39: 'there', 40: 'would', 41: 'its', 42: 'so', 43: 'their', 44: 'than', 45: 'children', 46: 'very', 47: 'things', 48: 'any', 49: 'upon', 50: 'i', 51: 'can', 52: 'if', 53: 'do', 54: 'who', 55: 'child', 56: 'new', 57: 'life', 58: 'It', 59: 'should', 60: 'them', 61: 'only', 62: 'world', 63: 'must', 64: 'on', 65: 'such', 66: 'great', 67: 'people', 68: 'man', 69: 'into', 70: 'most', 71: 'out', 72: 'little', 73: 'what', 74: 'was', 75: 'every', 76: 'some', 77: 'much', 78: 'certain', 79: 'And', 80: 'about', 81: 'men', 82: 'english', 83: 'far', 84: 'present', 85: 'first', 86: 'many', 87: 'been', 88: 'thing', 89: 'those', 90: 'home', 91: 'good', 92: 'But', 93: 'quite', 94: 'way', 95: 'might', 96: 'other', 97: 'us', 98: 'general', 99: 'They', 100: 'social',
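
A dictionary like the one above can be built by counting words and keeping only the most frequent ones, with every other word mapped to index 0 (`UNK`). The sketch below follows that idea under stated assumptions: the function names `build_dataset` and `format_dictionary` are illustrative, and the formatting only imitates the layout of the snippet above; the code that actually writes dictionary.txt is not shown here.

```python
import collections


def build_dataset(words, vocabulary_size):
    """Map words to integer indices, replacing rare words with UNK (index 0).

    Returns (data, count, dictionary, reverse_dictionary).
    """
    count = [["UNK", -1]]
    # Keep the (vocabulary_size - 1) most frequent words; index 0 is UNK.
    count.extend(collections.Counter(words).most_common(vocabulary_size - 1))
    dictionary = {word: index for index, (word, _) in enumerate(count)}
    data = []
    unk_count = 0
    for word in words:
        index = dictionary.get(word, 0)  # unknown words fall back to UNK
        if index == 0:
            unk_count += 1
        data.append(index)
    count[0][1] = unk_count
    reverse_dictionary = {index: word for word, index in dictionary.items()}
    return data, count, dictionary, reverse_dictionary


def format_dictionary(reverse_dictionary):
    """Format index-to-word entries as "0: 'UNK', 1: 'the', ..."."""
    return ", ".join(
        f"{index}: {word!r}"
        for index, word in sorted(reverse_dictionary.items())
    )


if __name__ == "__main__":
    words = "the cat and the dog and the bird".split()
    data, count, dictionary, reverse_dictionary = build_dataset(words, 3)
    print(data)  # [1, 0, 2, 1, 0, 2, 1, 0]
    print(format_dictionary(reverse_dictionary))  # 0: 'UNK', 1: 'the', 2: 'and'
```

Because indices are assigned in order of frequency, low numbers correspond to the most common words, which matches the snippet: 'the', 'of' and 'and' come directly after `UNK`.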