Word2vec basic.py

From Algolit

Revision as of 16:59, 3 October 2017

This is an annotated version of the basic word2vec script, word2vec_basic.py. The code is based on the Word2Vec tutorial provided by TensorFlow.

History

Word2vec is a group of related models used to generate vectors from words (also called word embeddings). Each model is a shallow, two-layer neural network. Word2vec was developed by a team of researchers led by Tomas Mikolov at *Google*.
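To make "two-layer neural network" a little more concrete, here is a minimal pure-Python sketch of a skip-gram forward pass. It assumes a hypothetical three-word vocabulary with hand-picked 2-dimensional weights; none of these names or numbers come from the Algolit script. The first layer is just a row lookup in the embedding matrix, and the second layer scores every vocabulary word against that row and turns the scores into probabilities with a softmax. The TensorFlow script trains with noise-contrastive estimation (`tf.nn.nce_loss`) rather than a full softmax, so this only illustrates the shape of the network, not its training.

```python
import math

def forward(center_id, embeddings, out_weights):
    """Skip-gram forward pass: probability of each vocabulary word appearing near center_id."""
    # Layer 1: the hidden layer is simply the centre word's row in the embedding matrix.
    hidden = embeddings[center_id]
    # Layer 2: one score per vocabulary word (dot product with that word's output vector).
    scores = [sum(h * w for h, w in zip(hidden, row)) for row in out_weights]
    # Softmax (shifted by the max score for numerical stability) gives a distribution.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical 3-word vocabulary with 2-dimensional vectors (not trained values).
embeddings = [[0.1, 0.3], [0.5, -0.2], [-0.4, 0.8]]
out_weights = [[0.2, 0.1], [-0.3, 0.6], [0.7, -0.5]]
probs = forward(1, embeddings, out_weights)
print([round(p, 3) for p in probs])
```

After training, only the first layer (the embedding matrix) is kept; its rows are the word vectors.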

word2vec_basic_algolit.py

The structure of the annotated word2vec script is the following:

- Step 1: Download data.

- Algolit adaptation: read data from a plain text file

- Step 2: Create a dictionary and replace rare words with the UNK token.

- Algolit adaptation: write the dictionary to dictionary.txt

- Step 3: Function to generate a training batch for the skip-gram model.

- Step 4: Build and train a skip-gram model.

- Algolit adaptation: select your own set of test words, using dictionary.txt

- Step 5: Begin training.

- Algolit adaptation: write training log to logfile.txt

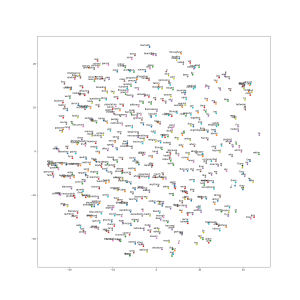

- Step 6: Visualize the embeddings.
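Step 3 turns the text into (centre word, context word) pairs for the skip-gram model. In the TensorFlow script, `generate_batch` slides a window over the data and picks `num_skips` context words at random for each centre word. The sketch below is a simplified, deterministic variant (an assumption, not the script's code): it emits every pair within `skip_window` positions and then cuts the pairs into batches. The word ids are taken from the dictionary.txt snippet further down ('the' = 1, 'child' = 55, 'is' = 7, 'new' = 56); the sentence itself is hypothetical.

```python
def skipgram_pairs(word_ids, skip_window=1):
    """Return (centre, context) pairs for every word within skip_window positions."""
    pairs = []
    for i, centre in enumerate(word_ids):
        start = max(0, i - skip_window)
        end = min(len(word_ids), i + skip_window + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((centre, word_ids[j]))
    return pairs

def batches(pairs, batch_size):
    """Yield consecutive (inputs, labels) batches of at most batch_size pairs."""
    for k in range(0, len(pairs), batch_size):
        chunk = pairs[k:k + batch_size]
        yield [c for c, _ in chunk], [ctx for _, ctx in chunk]

# "the child is new" encoded with ids from the dictionary.txt snippet
pairs = skipgram_pairs([1, 55, 7, 56])
print(pairs)  # [(1, 55), (55, 1), (55, 7), (7, 55), (7, 56), (56, 7)]
for inputs, labels in batches(pairs, 4):
    print(inputs, labels)
# [1, 55, 55, 7] [55, 1, 7, 55]
# [7, 56] [56, 7]
```

A larger `skip_window` gives each word more context pairs, at the cost of more (and noisier) training examples.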

Source

The script word2vec_basic.py can download a dataset from Matt Mahoney's home page. This dataset turns out to be a plain text document without any punctuation or line breaks.

For the tests we wanted to run with the script, we decided to work with a piece of literature instead. Because we wanted to share and publish our code and training data, we picked a book in the public domain: Mankind in the Making by H. G. Wells, downloaded from Project Gutenberg.

Before we could use Wells' text as training material, we needed to remove all punctuation from the file. To do this, we wrote a small Python script, text-punctuation-clean-up.py, which saves a *stripped* version of the original book under a different filename.
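The contents of text-punctuation-clean-up.py are not reproduced on this page, so the following is only a hypothetical sketch of the same idea. It assumes that "punctuation" means the ASCII characters in Python's `string.punctuation` (curly quotes and other non-ASCII marks would survive) and that line breaks should become spaces, as in the Mahoney dataset. Punctuation is deleted rather than replaced, which would turn "narrow-chested" into "narrowchested", the form that appears in logfile.txt. The hypothetical `read_words` helper shows what "read data from a plain text file" can amount to once the text is stripped.

```python
import string

# Translation table that deletes every ASCII punctuation character
# (assumption: only the characters in string.punctuation are removed).
STRIP_PUNCTUATION = str.maketrans("", "", string.punctuation)

def strip_text(text):
    """Delete punctuation, then collapse line breaks and repeated spaces into single spaces."""
    return " ".join(text.translate(STRIP_PUNCTUATION).split())

def strip_file(source, target):
    """Save a stripped copy of the file source under the filename target (hypothetical helper)."""
    with open(source, encoding="utf-8") as f:
        text = f.read()
    with open(target, "w", encoding="utf-8") as f:
        f.write(strip_text(text))

def read_words(path):
    """Read a stripped plain text file as a list of words (hypothetical helper)."""
    with open(path, encoding="utf-8") as f:
        return f.read().split()

print(strip_text("Narrow-chested, uncivil men;\nthe child's education."))
# Narrowchested uncivil men the childs education
```

Case is left untouched, which matches dictionary.txt listing 'the' and 'The' as separate words.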

dictionary.txt

A snippet from the dictionary.txt file:

0: 'UNK', 1: 'the', 2: 'of', 3: 'and', 4: 'to', 5: 'a', 6: 'in', 7: 'is', 8: 'that', 9: 'it', 10: 'be', 11: 'for', 12: 'as', 13: 'are', 14: 'with', 15: 'not', 16: 'this', 17: 'or', 18: 'will', 19: 'at', 20: 'we', 21: 'but', 22: 'by', 23: 'may', 24: 'his', 25: 'all', 26: 'an', 27: 'these', 28: 'they', 29: 'have', 30: 'he', 31: 'from', 32: 'our', 33: 'has', 34: 'The', 35: 'no', 36: 'more', 37: 'which', 38: 'one', 39: 'there', 40: 'would', 41: 'its', 42: 'so', 43: 'their', 44: 'than', 45: 'children', 46: 'very', 47: 'things', 48: 'any', 49: 'upon', 50: 'i', 51: 'can', 52: 'if', 53: 'do', 54: 'who', 55: 'child', 56: 'new', 57: 'life', 58: 'It', 59: 'should', 60: 'them', 61: 'only', 62: 'world', 63: 'must', 64: 'on', 65: 'such', 66: 'great', 67: 'people', 68: 'man', 69: 'into', 70: 'most', 71: 'out', 72: 'little', 73: 'what', 74: 'was', 75: 'every', 76: 'some', 77: 'much', 78: 'certain', 79: 'And', 80: 'about', 81: 'men', 82: 'english', 83: 'far', 84: 'present', 85: 'first', 86: 'many', 87: 'been', 88: 'thing', 89: 'those', 90: 'home', 91: 'good', 92: 'But', 93: 'quite', 94: 'way', 95: 'might', 96: 'other', 97: 'us', 98: 'general', 99: 'They', 100: 'social',
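The snippet reads like a printed Python dictionary that maps each integer id to a word, with id 0 reserved for UNK and the remaining ids assigned in order of frequency. A minimal sketch of how such a mapping can be built and written out, assuming dictionary.txt is simply the printed id-to-word dictionary (the function names and the `n_words` parameter are illustrative, not quoted from the script):

```python
import collections

def build_dataset(words, n_words):
    """Give the n_words - 1 most common words ids 1, 2, ...; every other word becomes UNK (id 0)."""
    counts = collections.Counter(words).most_common(n_words - 1)
    dictionary = {"UNK": 0}
    for word, _ in counts:
        dictionary[word] = len(dictionary)
    data = [dictionary.get(word, 0) for word in words]  # the text as a list of ids
    reversed_dictionary = {i: w for w, i in dictionary.items()}
    return data, dictionary, reversed_dictionary

def write_dictionary(reversed_dictionary, path="dictionary.txt"):
    """Write the id -> word mapping as a printed dict, like the snippet above (assumed format)."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(str(reversed_dictionary))

data, dictionary, reversed_dictionary = build_dataset(
    "the child and the world and the child".split(), n_words=4)
print(data)                 # [1, 2, 3, 1, 0, 3, 1, 2]
print(reversed_dictionary)  # {0: 'UNK', 1: 'the', 2: 'child', 3: 'and'}
```

Here "world" falls outside the three most common words and is replaced by UNK. Like the snippet, the sketch does not lowercase, so 'The' and 'the' would get separate ids.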

logfile.txt

An example snippet from logfile.txt:

Nearest to education: criticism, family, statistics, varieties, sign, karl, manner, euphemism, concurrence, absurdity,

Nearest to complex: love, ascribed, sadder, abundance, positivist, spin, subtlety, spectacle, heedless, number,

Nearest to beliefs: access, advantageous, bound, opens, determined, idle, bringing, binding, considered, unprotected,

Nearest to harmony: fumble, alienists, sketching, disaster, compete, survival, rule, textbooks, encumbered, dowries,

Nearest to uncivil: narrowchested, inferiors, pitiful, angry, beautifully, accentuate, petals, predisposition, individualistic, produced,
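Each log line lists the words whose vectors are closest to a test word. In the TensorFlow script this is done by normalizing the embeddings, comparing them with a matrix product (cosine similarity) and sorting the scores. The pure-Python sketch below does the same on hypothetical 2-dimensional toy vectors (not trained values) and formats the result by appending " word," for each neighbour, which is consistent with the trailing comma after each word in the log.

```python
import math

def normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def nearest(word, embeddings, top_k):
    """Return the top_k words with the highest cosine similarity to word (excluding word itself)."""
    target = normalize(embeddings[word])
    scores = []
    for other, vec in embeddings.items():
        if other != word:
            sim = sum(a * b for a, b in zip(target, normalize(vec)))
            scores.append((sim, other))
    scores.sort(reverse=True)  # most similar first
    return [other for _, other in scores[:top_k]]

def log_line(word, neighbours):
    """Format one line in the style of logfile.txt."""
    line = "Nearest to %s:" % word
    for n in neighbours:
        line = "%s %s," % (line, n)
    return line

# Hypothetical toy vectors, only for illustration.
embeddings = {
    "education": [1.0, 0.1],
    "criticism": [0.9, 0.2],
    "family": [0.5, 0.5],
    "petals": [-0.3, 1.0],
}
print(log_line("education", nearest("education", embeddings, 2)))
# Nearest to education: criticism, family,
```

Cosine similarity only compares the direction of two vectors, not their length, so frequent and rare words can still be neighbours.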